Presentations

Posters

- Bhavan Chahal, Using deep learning tools to infer house prices from Google Street View Images [pdf,video,talky.io]

- Adam Gothorp, Inverse problems for Bayesian errors-in-variables regression models [pdf,video,talky.io]

- Florentin Goyens, Nonlinear matrix recovery [pdf,video,talky.io]

- Eric Baruch Gutierrez, An Example of Primal-Dual Methods for Nonsmooth Large-Scale Machine Learning [video,talky.io]

- Haoran Ni, Numerical Estimation of Information Measures [pdf,video,talky.io]

- Malena Sabaté, Iteratively Reweighted Flexible Krylov methods for Sparse Reconstruction [pdf,video,talky.io]

- Leah Stella, Tomographic Imaging of SARS-CoV-2 [video,talky.io]

- Cathie Wells, Optimising trajectories to reduce transatlantic flight emissions [pdf,video,talky.io]

Talks

Mathematics of Neural Networks and Deep Learning

- Barbara Mahler, Contagion Maps for Manifold Learning

- Patrick Kidger, Universal Approximation - Transposed!, [video, arXiv]

- Haoran Ni, Numerical Estimation of Information Measures

Optimisation

- Louis Sharrock, Two-Timescale Stochastic Approximation in Continuous Time with Applications to Joint Online Parameter Estimation and Optimal Sensor Placement

- Nash Treetanthi, Uncertainty aversion in multi-armed bandit problems

- Florentin Goyens, Nonlinear matrix recovery, [video]

- Vadim Platonov, Forward utilities and Mean-field games under relative performance concerns

- Melanie Beckerleg, Binary Matrix Completion for Recommender Systems, with applications to Drug Discovery

Applications of machine learning in life sciences

- Tamara Grossman, Deeply Learned Spectral Total Variation Decomposition

- Lancelot Da-Costa, A global brain theory, stochastic thermodynamics and applications to autonomous behaviour, [video]

- Laura Guzmán Rincón, Outbreak detection using Bayesian hierarchical modelling and Gaussian random fields

Bayesian methods

- Henry Moss, BOSH: Bayesian Optimisation Sampled Hierarchically

- Sam Power, Accelerated Sampling on Discrete Spaces with Non-Reversible Markov Processes, [video]

- Riccardo Barbano, Quantifying Model-Uncertainty in Inverse Problems via Bayesian Deep Gradient Descent

List of abstracts

Contagion Maps for Manifold Learning

Barbara Mahler, University of Oxford

Contagion maps are a family of maps that map nodes of a network to points in a high-dimensional space, based on the activation times in a threshold contagion on the network. A point cloud that is the image of such a map reflects both the structure underlying the network and the spreading behaviour of the contagion on it. Intuitively, such a point cloud exhibits features of the network’s underlying structure if the contagion spreads along that structure, an observation which suggests contagion maps as a viable manifold-learning technique. We test contagion maps as a manifold-learning tool on several different data sets, and compare their performance to that of Isomap, one of the best-known manifold-learning algorithms. We find that, under certain conditions, contagion maps are able to reliably detect underlying manifold structure in noisy data, whereas Isomap is prone to noise-induced error. This consolidates contagion maps as a technique for manifold learning.

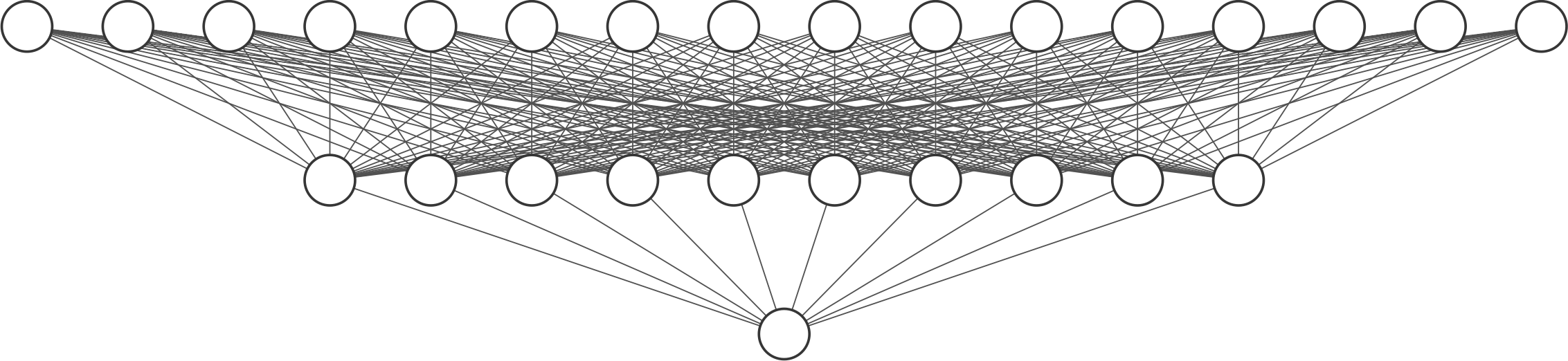
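A minimal sketch of the map described above, assuming a Watts-style threshold contagion with synchronous updates, in which a contagion started at node j initially activates j and its neighbours; node i is then sent to the vector of its activation times over all seeds. The function names, the seeding rule and the ring-lattice example are illustrative assumptions, not the speaker's code.

```python
from typing import Dict, List, Set

Graph = Dict[int, Set[int]]


def activation_times(graph: Graph, seed: int, threshold: float) -> Dict[int, float]:
    """Synchronous threshold contagion seeded at `seed` and its neighbours.

    A node activates once the fraction of its active neighbours reaches
    `threshold`; nodes the contagion never reaches get time infinity.
    """
    active = {seed} | graph[seed]
    times = {v: 0.0 for v in active}
    step = 0
    while True:
        step += 1
        # All nodes crossing the threshold at this step, judged against the
        # active set from the previous step (synchronous update).
        newly = {
            v for v in graph
            if v not in active and graph[v]
            and len(graph[v] & active) / len(graph[v]) >= threshold
        }
        if not newly:
            break
        for v in newly:
            times[v] = float(step)
        active |= newly
    return {v: times.get(v, float("inf")) for v in graph}


def contagion_map(graph: Graph, threshold: float) -> Dict[int, List[float]]:
    """Map node i to the vector of its activation times, one coordinate per seed."""
    seeds = sorted(graph)
    per_seed = [activation_times(graph, s, threshold) for s in seeds]
    return {i: [per_seed[j][i] for j in range(len(seeds))] for i in graph}


# Hypothetical example: a ring lattice where each node links to the two nodes on either side.
n = 20
ring: Graph = {i: {(i + d) % n for d in (-2, -1, 1, 2)} for i in range(n)}
points = contagion_map(ring, threshold=0.3)
```

On this ring lattice the wavefront advances one node per step in each direction, so each coordinate grows with ring distance from the seed and the resulting point cloud follows the underlying circle.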

Universal Approximation - Transposed!

Patrick Kidger, University of Oxford

The classical Universal Approximation Theorem is a foundational result in the theory of neural networks and, roughly speaking, states that arbitrarily wide networks of bounded depth can approximate any continuous function. This is often cited as one of the reasons that neural networks actually work! It motivates a natural ‘dual’ problem: what about networks of bounded width but arbitrary depth? This dual problem is arguably closer to many of the networks that are used in practice. Here we address this problem by proving universal approximation for broad classes of activation functions, improving on previous work that applies only to the ReLU. In particular, our results hold for polynomial activation functions, which is a qualitative difference from the classical version of the theorem, where polynomial activations do not give universal approximation. If time allows, we will discuss extensions of this result to nowhere-differentiable activation functions and noncompact domains, as well as the minimal width of network that can be achieved.

Numerical Estimation of Information Measures

Haoran Ni, University of Warwick

Entropy measures the information content of a random quantity. The closely related concept of mutual information (MI) between two random variables measures the reduction in uncertainty of one random variable due to information obtained from the other. As an information-theoretic quantity, MI plays an important role in many scientific disciplines. Its applications include decision trees in machine learning, independent component analysis (ICA), gene detection and expression, link prediction, topic discovery, image registration, feature selection and transformations, and channel capacity. MI has theoretical and practical properties that make it superior to simpler measures; for example, it is zero if and only if the two variables are independent, and it captures nonlinear as well as linear dependence. At the same time, estimating MI from samples is both important and difficult. The proposed research aims to develop efficient mutual information estimation systems with a set of estimators that produce accurate results irrespective of sample size, dimensionality, and correlation. The systems will include: (1) improvements to existing estimators; (2) a new estimator based on approximate k-nearest-neighbour (kNN) algorithms; (3) new algorithms based on fast and sparse Johnson-Lindenstrauss transforms; (4) bias correction approaches; and (5) a thorough and principled theoretical analysis of the new methods.
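To make the kNN idea concrete, here is a sketch of the classical Kraskov-Stögbauer-Grassberger (KSG) estimator, a standard existing kNN estimator of the kind that item (1) proposes to improve. It is not the new approximate-kNN or Johnson-Lindenstrauss estimator proposed in the abstract. It uses brute-force O(n²) distances and NumPy only; the function names and the Gaussian test case are illustrative assumptions.

```python
import numpy as np


def _digamma_int(m):
    """Digamma at positive integers: psi(m) = -gamma + sum_{j=1}^{m-1} 1/j."""
    m = np.asarray(m)
    harmonic = np.concatenate(([0.0], np.cumsum(1.0 / np.arange(1, m.max() + 1))))
    return harmonic[m - 1] - np.euler_gamma


def ksg_mutual_information(x, y, k=3):
    """KSG (algorithm 1) kNN estimate of I(X; Y) in nats."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    y = np.asarray(y, dtype=float).reshape(len(y), -1)
    n = x.shape[0]
    # Pairwise max-norm distances in each marginal space (brute force, O(n^2) memory)
    dx = np.abs(x[:, None, :] - x[None, :, :]).max(axis=-1)
    dy = np.abs(y[:, None, :] - y[None, :, :]).max(axis=-1)
    dz = np.maximum(dx, dy)  # max-norm in the joint space
    for d in (dx, dy, dz):
        np.fill_diagonal(d, np.inf)  # a point is not its own neighbour
    eps = np.sort(dz, axis=1)[:, k - 1]  # distance to the k-th joint neighbour
    nx = np.sum(dx < eps[:, None], axis=1)  # marginal neighbours strictly inside eps
    ny = np.sum(dy < eps[:, None], axis=1)
    psi_terms = _digamma_int(nx + 1) + _digamma_int(ny + 1)
    return float(_digamma_int(k) + _digamma_int(n) - np.mean(psi_terms))


# Usage: a correlated bivariate Gaussian, where MI is known in closed form.
rng = np.random.default_rng(0)
rho = 0.8
xy = rng.multivariate_normal([0.0, 0.0], [[1.0, rho], [rho, 1.0]], size=500)
estimate = ksg_mutual_information(xy[:, 0], xy[:, 1], k=3)
exact = -0.5 * np.log(1.0 - rho**2)  # about 0.511 nats
```

The brute-force distance matrices are exactly the bottleneck that approximate kNN search or random projections would aim to remove for large samples or high dimensions.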

Two-Timescale Stochastic Approximation in Continuous Time with Applications to Joint Online Parameter Estimation and Optimal Sensor Placement

Louis Sharrock, Imperial College London

In this talk, we consider the problem of joint online parameter estimation and optimal sensor placement in a partially observed diffusion process. The parameter estimation and the sensor placement are both to be performed online. We propose a solution to this problem in the form of a two-timescale stochastic gradient descent algorithm, and provide a rigorous theoretical analysis of its asymptotic properties. In particular, under suitable conditions relating to the ergodicity of the process consisting of the latent signal, the filter, and the tangent filter, we establish almost sure convergence of the online parameter estimates and the recursive optimal sensor placements to the stationary points of the asymptotic log-likelihood, and the asymptotic filter covariance, respectively. We also provide numerical examples illustrating the application of this methodology to the partially observed stochastic advection-diffusion equation.
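The two-timescale structure can be illustrated with a discrete-time toy problem. This is an assumption for illustration only: the talk's algorithm runs in continuous time on a partially observed diffusion. A fast variable `theta`, updated with larger step sizes `a_n`, effectively tracks its equilibrium for the current slow variable `phi`, which is updated with smaller step sizes `b_n`, where `b_n / a_n -> 0`. The objective and the step-size exponents are hypothetical.

```python
import numpy as np


def two_timescale_sgd(n_iter=200_000, seed=0):
    """Toy two-timescale stochastic approximation (hypothetical objective).

    Fast variable theta tracks theta*(phi) = phi + E[xi] = phi + 1 from noisy data;
    slow variable phi moves theta*(phi) towards the target value 4.
    """
    rng = np.random.default_rng(seed)
    noise = rng.normal(1.0, 1.0, size=n_iter).tolist()  # xi_n ~ N(1, 1)
    theta, phi = 0.0, 0.0
    for n, xi in enumerate(noise, start=1):
        a_n = n ** -0.6  # fast step size
        b_n = n ** -0.9  # slow step size; b_n / a_n -> 0
        theta -= a_n * (theta - (phi + xi))  # fast timescale
        phi -= b_n * (theta - 4.0)           # slow timescale, uses current theta
    return theta, phi


theta, phi = two_timescale_sgd()  # expected to approach theta = 4, phi = 3
```

Both step-size sequences sum to infinity while their squares are summable, and the ratio between them vanishes, which is the usual condition under which the slow iterate sees the fast one as already converged.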

Uncertainty aversion in multi-armed bandit problems

Nash Treetanthi, University of Oxford

As exemplified by the Ellsberg paradox, in which people prefer bets with known odds to bets with unknown odds, decision makers often display a preference for options which carry less uncertainty. This is ‘pessimistic’ behaviour towards uncertainty. In contrast, many bandit algorithms are designed to be ‘optimistic’ in the face of uncertainty in order to benefit learning. As a result, such algorithms may make decisions opposite to the agent’s preferences.

To allow both learning and uncertainty aversion, we extend the classical Gittins index theorem to a robust version using the theory of nonlinear expectations. This involves studying controls that determine the filtration, a model that captures consistent uncertainty unaffected by the controls, and a relaxation of the dynamic programming principle.

A numerical example of a binomial bandit is also considered to illustrate the interaction between an agent’s willingness to explore and the uncertainty aversion arising from the sensitivity of the statistical estimates. It shows that the preference to learn or to exploit can also be affected by the current estimate of the cost and by the precision of the available estimates. Monte Carlo simulations also show the benefit of this approach for decision-making when a large number of choices are available.

Nonlinear matrix recovery

Florentin Goyens, University of Oxford

We address the numerical problem of recovering a partially observed high-rank matrix whose columns obey a nonlinear structure. The structures we cover include points grouped as clusters or belonging to an algebraic variety. Using a nonlinear lifting to a space of features, we reduce the problem to a constrained non-convex optimization formulation. We use a Riemannian optimization method and an alternating minimization scheme. Both approaches have first- and second-order variants, with theoretical convergence guarantees as well as global worst-case rates. We provide extensive numerical results for the recovery of unions of subspaces and clusters under entry sampling and dense Gaussian sampling.
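The effect of the lifting can be illustrated on a small case with hypothetical data, not the talk's experiments. Columns lying on the unit circle in R^2 give a full-rank matrix with no linear structure, but after mapping each column to its monomials of degree at most 2 the lifted matrix becomes rank-deficient, because every column satisfies 1 - x^2 - y^2 = 0. The completion step itself (Riemannian or alternating minimization) is not shown, and `monomial_features_deg2` is a hypothetical helper.

```python
import numpy as np


def monomial_features_deg2(points):
    """Lift 2-D columns (x, y) to all monomials of degree <= 2."""
    x, y = points
    return np.vstack([np.ones_like(x), x, y, x**2, x * y, y**2])


rng = np.random.default_rng(0)
angles = rng.uniform(0.0, 2.0 * np.pi, size=50)
X = np.vstack([np.cos(angles), np.sin(angles)])  # 2 x 50, columns on the unit circle
Phi = monomial_features_deg2(X)                   # 6 x 50 lifted matrix

rank_X = np.linalg.matrix_rank(X)      # 2: full rank in the original space
rank_Phi = np.linalg.matrix_rank(Phi)  # 5: one linear relation among the 6 features
```

The vector (1, 0, 0, -1, 0, -1) spans the left null space of the lifted matrix and encodes the circle's equation; a low-rank method applied in feature space can exploit this relation to fill in missing entries.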

Forward utilities and Mean-field games under relative performance concerns

Vadim Platonov, University of Edinburgh

We introduce the concept of mean-field games for agents using forward utilities to study a family of portfolio management problems under relative performance concerns. Under asset specialization of the fund managers, we solve the forward-utility finite-player game and the forward-utility mean-field game. We study best-response and equilibrium strategies in the single-common-stock setting and in the asset-specialization setting with common noise. As an application, we draw on the core features of the forward utility paradigm and discuss a problem of time-consistent mean-field dynamic model selection over sequential time horizons.

Binary Matrix Completion for Recommender Systems, with applications to Drug Discovery

Melanie Beckerleg, University of Oxford

Making predictions of missing entries in large databases is useful in applications including e-commerce, online services and biomedical research. In this talk I will present my findings on the use of predictive clustering to make recommendations. The starting point is a database of interactions, for example between drug compounds and target proteins that are thought to cause disease. Interactions can be described in terms of user clusters (i.e. drugs in the same group have similar interaction profiles). In real-world applications, many of these interactions will be unobserved. My aim is to recover not only accurate predictions of the missing entries, but also the underlying groupings. This allows us to make predictions that are interpretable as well as accurate. I will present a convex relaxation of the problem and compare it against other algorithms, in terms of both numerical results and theoretical guarantees for recovery.
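One common convex relaxation of matrix completion replaces the rank with the nuclear norm. The sketch below solves that relaxation with the Soft-Impute iteration (singular value soft-thresholding) on a hypothetical clustered +1/-1 drug-target matrix. This generic relaxation is an assumption for illustration; the relaxation presented in the talk, which also recovers the cluster structure, may differ. The data, `lam` and `n_iter` values are hypothetical.

```python
import numpy as np


def soft_impute(observed, mask, lam=1.0, n_iter=500):
    """Soft-Impute for min 0.5*||P_mask(observed - Z)||_F^2 + lam*||Z||_* (nuclear norm)."""
    Z = np.zeros_like(observed, dtype=float)
    for _ in range(n_iter):
        filled = np.where(mask, observed, Z)  # keep observed entries, impute the rest
        U, s, Vt = np.linalg.svd(filled, full_matrices=False)
        Z = (U * np.maximum(s - lam, 0.0)) @ Vt  # soft-threshold the singular values
    return Z


# Hypothetical data: 30 drugs and 20 targets, each split into 2 clusters, +1/-1 interactions.
drug_cluster = np.arange(30) % 2
target_cluster = np.arange(20) % 2
block = np.array([[1.0, -1.0], [1.0, 1.0]])
M = block[drug_cluster][:, target_cluster]  # rank-2 binary interaction matrix

rng = np.random.default_rng(0)
mask = rng.random(M.shape) < 0.5  # roughly half of the interactions are observed
Z = soft_impute(np.where(mask, M, 0.0), mask)
accuracy = np.mean(np.sign(Z[~mask]) == M[~mask])  # sign agreement on unobserved entries
```

Taking the sign of the completed matrix turns the real-valued relaxation back into binary predictions; recovering the drug and target groupings would need an extra step not shown here.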

Deeply Learned Spectral Total Variation Decomposition

Tamara Grossman, University of Cambridge

Non-linear spectral decompositions of images based on one-homogeneous functionals such as total variation have gained considerable attention. Due to their ability to extract spectral components based on the size and contrast of objects, such decompositions enable image manipulation, filtering, feature transfer and image fusion, among other applications. However, in the classical approach, obtaining this decomposition involves solving multiple non-smooth optimisation problems and can therefore be computationally slow. In this talk, we discuss the possibility of reproducing a non-linear spectral decomposition via neural networks. Not only do we gain a computational advantage, but this approach can also be seen as a step towards studying neural networks that can decompose an image into spectral components defined by a user rather than by a handcrafted functional.

A global brain theory, stochastic thermodynamics and applications to autonomous behaviour

Lancelot Da-Costa, Imperial College London

This talk (1) reviews the free energy principle, a global theory of brain function based on variational Bayesian inference; (2) shows how the free energy principle can be justified from Langevin dynamics; and (3) shows how these ideas can be used to simulate autonomous agents in active inference, a framework similar to Bayesian reinforcement learning.

Outbreak detection using Bayesian hierarchical modelling and Gaussian random fields

Laura Guzmán Rincón, University of Warwick

Identification and investigation of outbreaks remain a high priority for health authorities. Early detection of infectious diseases facilitates interventions and prevents further transmission. For that purpose, surveillance systems collect epidemiological data from patients, including location, onset time of infection and, with the recent improvements in genotyping techniques, whole-genome sequences of bacteria. For outbreak detection, several statistical methods have been proposed using spatial, temporal or genetic data. However, techniques combining genomic and epidemiological factors are still underdeveloped. This talk will describe a new approach that aims to combine diverse sources of data for outbreak detection. The methodology tackles the problem as a classification task using Bayesian hierarchical models, based on an existing spatial-temporal model proposed by Spencer et al. [Spat. Spatio-temporal Epidemiol. 2(3), 173–183 (2011)]. The talk will show that the latent parameters of the model are Gaussian random fields adapted to different types of data. Moreover, the model will be applied to study reported cases of Campylobacter infections in two regions in the UK. Two scenarios will be explained, in which a temporal-genetic and a spatial-genetic model are trained using the proposed framework. The method allows us to find potential diffuse outbreaks that could not be captured using spatial-temporal methods.

BOSH: Bayesian Optimisation Sampled Hierarchically

Henry Moss, Lancaster University

Deployments of Bayesian Optimisation (BO) for functions with stochastic evaluations, such as parameter tuning via cross validation and simulation optimisation, typically optimise an average of noisy realisations of the objective function induced by a fixed collection of random seeds. However, disregarding the true objective function in this manner means that BO finds a high-precision optimum of the wrong function. To solve this problem, we propose Bayesian Optimisation by Sampling Hierarchically (BOSH), a novel BO routine pairing a hierarchical Gaussian process with a custom information-theoretic framework to generate a growing pool of seeds as the optimisation progresses. We demonstrate that BOSH provides more efficient and higher-precision optimisation than standard BO across synthetic benchmarks, simulation optimisation, reinforcement learning and hyper-parameter tuning tasks.
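The failure mode described above can be reproduced with a hypothetical one-dimensional objective. This sketch illustrates the problem only, not BOSH's hierarchical Gaussian process or its seed-selection rule, and a plain grid search stands in for the BO routine. The noise term averages to zero over a uniformly random seed (phase), so the true optimum is x = 1, yet the average over a small fixed pool of seeds keeps a residual oscillation and its minimiser lands elsewhere.

```python
import numpy as np


def noisy_objective(x, phase):
    """Hypothetical stochastic objective: (x - 1)^2 plus seed-dependent noise."""
    return (x - 1.0) ** 2 + 0.5 * np.sin(5.0 * x + phase)


xs = np.linspace(-1.0, 3.0, 801)

# True objective: the sine term averages to zero over a uniformly random phase.
x_true = xs[np.argmin((xs - 1.0) ** 2)]

# Fixed pool of three "seeds": their average keeps a residual sinusoid.
fixed_phases = [0.3, 1.1, 2.0]
avg_fixed = sum(noisy_objective(xs, p) for p in fixed_phases) / len(fixed_phases)
x_fixed = xs[np.argmin(avg_fixed)]

# A much larger pool of seeds: the residual shrinks and the optimum moves back.
rng = np.random.default_rng(0)
many_phases = rng.uniform(0.0, 2.0 * np.pi, size=2000)
avg_many = sum(noisy_objective(xs, p) for p in many_phases) / len(many_phases)
x_many = xs[np.argmin(avg_many)]
```

With the three fixed phases, the minimiser sits at roughly 0.77, a precise optimum of the wrong function, while the 2000-seed average brings it back close to 1. Growing the seed pool adaptively, as BOSH does, targets this gap without paying for a huge fixed pool.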

Accelerated Sampling on Discrete Spaces with Non-Reversible Markov Processes

Sam Power, University of Cambridge

Sampling methods for high-dimensional distributions on continuous state spaces have advanced in leaps and bounds in recent years, backed by improved theoretical results and bolstered by the widespread adoption of easy-to-use automatic differentiation packages, which make gradient-based methods easy to apply. In contrast, simulation on discrete spaces retains a reputation for requiring bespoke, model-specific solutions, and as such, discrete models are often overlooked. In this work (joint with Jacob Vorstrup Goldman), we aim to demonstrate that sampling on discrete spaces can be treated with a similar level of generality to continuous spaces, and we introduce a suite of algorithms for efficient simulation on structured discrete spaces. The algorithms build on recent developments in non-reversible, continuous-time Monte Carlo methods, and can considerably outperform previous baseline algorithms in examples drawn from statistics, machine learning, physics, and beyond.
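For intuition about non-reversibility on a discrete space, here is a sketch of a lifted Metropolis chain (the "guided walk" idea) on {0, ..., K-1}. The state is augmented with a direction v = ±1; the chain keeps moving in direction v while proposals are accepted and reverses v on rejection, which suppresses the back-and-forth of a reversible random walk. This is a simple discrete-time relative of the continuous-time methods in the talk, not the authors' algorithms; the target and the function name are hypothetical.

```python
import math
import random


def lifted_metropolis(log_target, n_states, n_steps, seed=0):
    """Lifted (non-reversible) Metropolis on {0, ..., n_states - 1}.

    Returns the empirical visit frequencies of the position x.
    """
    rng = random.Random(seed)
    x, v = n_states // 2, 1  # position and direction
    counts = [0] * n_states
    for _ in range(n_steps):
        y = x + v  # always propose a step in the current direction
        if 0 <= y < n_states and rng.random() < math.exp(
            min(0.0, log_target(y) - log_target(x))
        ):
            x = y   # accept: keep moving the same way
        else:
            v = -v  # reject (or hit the boundary): reverse direction
        counts[x] += 1
    return [c / n_steps for c in counts]


# Hypothetical target: a discretised Gaussian centred at 10 on {0, ..., 20}.
log_target = lambda x: -((x - 10) ** 2) / 8.0
freqs = lifted_metropolis(log_target, n_states=21, n_steps=200_000)
```

The joint chain on (x, v) leaves pi(x)/2 invariant for each direction, so the position marginal is the target, even though detailed balance fails for the position alone.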

Quantifying Model-Uncertainty in Inverse Problems via Bayesian Deep Gradient Descent

Riccardo Barbano, University College London

Recent advances in reconstruction methods for inverse problems leverage powerful data-driven models, e.g., deep neural networks. These techniques have demonstrated state-of-the-art performance on several imaging tasks, but they often do not provide uncertainty estimates for the obtained reconstructions. In this work, we develop a novel, scalable, data-driven, knowledge-aided computational framework to quantify the model uncertainty via Bayesian neural networks. The approach builds on and extends deep gradient descent, a recently developed greedy iterative training scheme, and recasts it within a probabilistic framework. The framework is showcased on several representative inverse imaging problems and is compared with a well-established benchmark, DnCNN.