Plenary Talks

The Mathematics of Deep Learning: Can we Open the Black Box of Deep Neural Networks? [video, slides]

Gitta Kutyniok, Technische Universität Berlin

Despite the outstanding success of deep neural networks in real-world applications, most of the related research is empirically driven and a comprehensive mathematical foundation is still missing. Regarding deep learning as a statistical learning problem, the necessary theory can be divided into the research directions of expressivity, learning, and generalization. Recently, the new direction of interpretability has become important as well. In this talk, we will provide an introduction to these four research foci. We will then delve a bit deeper into the area of expressivity, namely the approximation capacity of neural network architectures, which is one of the most developed mathematical theories so far, and discuss some recent work. Finally, we will provide a survey of the novel and highly relevant area of interpretability, which aims at developing an understanding of how a given network reaches decisions, and discuss the very first mathematically founded approach to this problem.

On Second Order Methods and Randomness [video]

Peter Richtárik, King Abdullah University of Science and Technology

We present two new, remarkably simple stochastic second-order methods for minimizing the average of a very large number of sufficiently smooth and strongly convex functions. The first is a stochastic variant of Newton’s method (SN), and the second is a stochastic variant of cubically regularized Newton’s method (SCN). We establish local linear-quadratic convergence results. Existing stochastic variants of second-order methods require the evaluation of a large number of gradients and/or Hessians in each iteration to guarantee convergence; our methods do not have this shortcoming. For instance, the simplest variants of our methods need to compute the gradient and Hessian of only a single randomly selected function in each iteration. In contrast to most existing stochastic Newton and quasi-Newton methods, our approach guarantees local convergence faster than with a first-order oracle and adapts to the problem’s curvature. Interestingly, our method is not unbiased, so our theory provides new intuition for designing new stochastic methods.
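To make the "single randomly selected function per iteration" idea concrete, here is a minimal one-dimensional sketch. It is not taken from the talk; it assumes one possible design in which each function keeps a stored point `w[i]` together with its gradient and Hessian there, every step solves the aggregated Newton system built from those stored values, and only one randomly chosen function is then re-evaluated. The function name `stochastic_newton_1d`, the test functions `exp(a*x) + x**2/2`, and all parameters are illustrative assumptions.

```python
import math
import random


def stochastic_newton_1d(grads, hessians, x0, iters, seed=0):
    """Sketch of a stochastic Newton-type iteration in one dimension.

    Assumed design (hypothetical, for illustration only): each f_i keeps a
    stored point w[i] with cached gradient g[i] and Hessian h[i]. Running
    sums make the cost per iteration one gradient and one Hessian evaluation.
    """
    rng = random.Random(seed)
    n = len(grads)
    w = [x0] * n
    # One-off initialisation: evaluate every function once at x0.
    g = [grads[i](x0) for i in range(n)]
    h = [hessians[i](x0) for i in range(n)]
    sum_h = sum(h)
    sum_rhs = sum(h[i] * w[i] - g[i] for i in range(n))
    x = x0
    for _ in range(iters):
        # Aggregated Newton step built from the stored information.
        x = sum_rhs / sum_h
        # Refresh a single randomly selected function at the new point.
        i = rng.randrange(n)
        new_g, new_h = grads[i](x), hessians[i](x)
        sum_h += new_h - h[i]
        sum_rhs += (new_h * x - new_g) - (h[i] * w[i] - g[i])
        w[i], g[i], h[i] = x, new_g, new_h
    return x


# Illustrative problem: f_i(x) = exp(a_i * x) + x^2 / 2 (smooth, strongly convex).
coeffs = [-1.0, -0.5, 0.3, 0.8, 1.2]
grads = [lambda x, a=a: a * math.exp(a * x) + x for a in coeffs]
hessians = [lambda x, a=a: a * a * math.exp(a * x) + 1.0 for a in coeffs]

x_hat = stochastic_newton_1d(grads, hessians, x0=0.0, iters=200)
full_gradient = sum(gr(x_hat) for gr in grads) / len(grads)
```

When every stored point equals the current iterate, the update reduces to an ordinary Newton step on the average, so a stationary point of the average is a fixed point of the iteration. The step is built from partly stale information rather than from an unbiased estimate of the full gradient, which is one way to read the abstract's remark that the method is not unbiased.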

Hybrid mathematical and machine learning methods for solving inverse imaging problems - getting the best from both worlds [video]

Carola Schönlieb, University of Cambridge

In this talk I will discuss image analysis methods which have both a mathematical modelling and a machine learning (data-driven) component. Mathematical modelling is useful in the presence of prior information about the imaging data and relevant biomarkers, for narrowing down the search space, for highly generalizable image analysis methods, and for guaranteeing desirable properties of image analysis solutions. Machine learning, on the other hand, is a powerful tool for customising image analysis methods to individual data sets. Their combination is the topic of this talk, furnished with examples of image classification under minimal supervision, with an application to chest X-rays, and of task-adapted tomographic reconstruction.

Scalable approximation of integrals using non-reversible methods [video]

Alexandre Bouchard-Côté, University of British Columbia

How can we approximate intractable integrals? This is an old problem which is still a pain point in many disciplines (including mine, Bayesian inference, but also statistical mechanics, computational chemistry, combinatorics, etc.).

The vast majority of current work on this problem (HMC, SGLD, variational) is based on mimicking the field of optimization, in particular gradient-based methods, and as a consequence focusses on Riemann integrals. This severely limits the applicability of these methods, making them inadequate for the wide range of problems requiring the full expressivity of Lebesgue integrals, for example integrals over phylogenetic tree spaces or other mixed combinatorial-continuous problems arising in network models, record linkage and feature allocation.

I will describe novel perspectives on the problem of approximating Lebesgue integrals, coming from the nascent field of non-reversible Monte Carlo methods. In particular, I will present an adaptive, non-reversible Parallel Tempering (PT) algorithm that allows MCMC exploration of challenging problems such as single-cell phylogenetic trees.

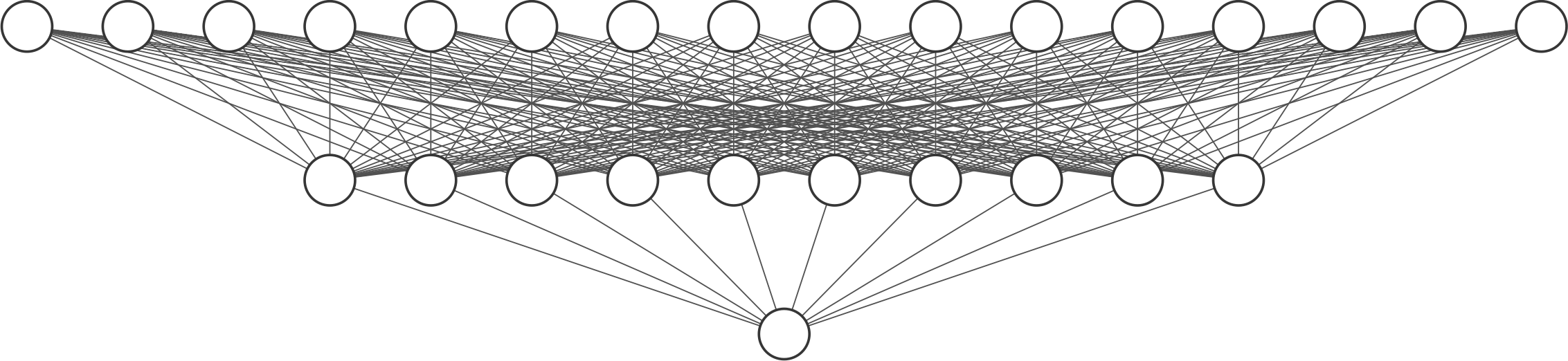

By analyzing the behaviour of PT algorithms using a novel asymptotic regime, a sharp divide emerges in the behaviour and performance of reversible versus non-reversible PT schemes: the performance of the former eventually collapses as the number of parallel cores increases, whereas non-reversible PT benefits from arbitrarily many available parallel cores. These theoretical results are exploited to develop an adaptive scheme approximating the optimal annealing schedule.
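As a rough illustration of what a non-reversible PT scheme can look like, the sketch below alternates deterministically between swap attempts on even and odd pairs of neighbouring chains, instead of choosing pairs at random. This deterministic even-odd schedule is an assumption about how non-reversibility is obtained, not a statement of the talk's exact algorithm. The toy bimodal target, the Gaussian reference, the fixed uniform annealing schedule (no adaptation), and all function names are likewise hypothetical.

```python
import math
import random


def log_ref(x):
    # Tractable reference distribution: N(0, 3^2), unnormalised.
    return -x * x / 18.0


def log_target(x):
    # Toy bimodal target: equal mixture of N(-3, 0.5^2) and N(3, 0.5^2).
    a = -((x - 3.0) ** 2) / 0.5
    b = -((x + 3.0) ** 2) / 0.5
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def swap_pairs(n_chains, iteration):
    # Even pairs (0,1),(2,3),... on even iterations; odd pairs otherwise.
    start = iteration % 2
    return [(i, i + 1) for i in range(start, n_chains - 1, 2)]


def parallel_tempering(n_chains=8, n_iters=2000, step=1.0, seed=0):
    rng = random.Random(seed)
    # Fixed uniform schedule from the reference (beta=0) to the target (beta=1).
    betas = [i / (n_chains - 1) for i in range(n_chains)]
    xs = [0.0] * n_chains
    accepted = [0] * (n_chains - 1)
    attempted = [0] * (n_chains - 1)
    samples = []
    for t in range(n_iters):
        # Local exploration: one random-walk Metropolis move per chain.
        for i, beta in enumerate(betas):
            prop = xs[i] + rng.gauss(0.0, step)
            log_ratio = ((1.0 - beta) * (log_ref(prop) - log_ref(xs[i]))
                         + beta * (log_target(prop) - log_target(xs[i])))
            if rng.random() < math.exp(min(0.0, log_ratio)):
                xs[i] = prop
        # Communication: deterministic even-odd swap attempts.
        for i, j in swap_pairs(n_chains, t):
            ell_i = log_target(xs[i]) - log_ref(xs[i])
            ell_j = log_target(xs[j]) - log_ref(xs[j])
            log_alpha = (betas[j] - betas[i]) * (ell_i - ell_j)
            attempted[i] += 1
            if rng.random() < math.exp(min(0.0, log_alpha)):
                xs[i], xs[j] = xs[j], xs[i]
                accepted[i] += 1
        samples.append(xs[-1])  # state of the chain at beta = 1
    rates = [a / max(1, n) for a, n in zip(accepted, attempted)]
    return samples, rates


samples, rates = parallel_tempering()
```

A reversible variant would replace `swap_pairs` with a random choice between the even and the odd set at each iteration. The per-pair acceptance rates returned in `rates` are the kind of quantity an adaptive scheme could use to tune the annealing schedule, though no adaptation is attempted here.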

My group is also interested in making these advanced non-reversible Monte Carlo methods easily available to data scientists. To do so, we have designed a Bayesian modelling language to perform inference over arbitrary data types using non-reversible, highly parallel algorithms.